AI has its own language—and half the time, it sounds like it was written by a robot. Words like token, hallucination, and transparency get tossed around in meetings, press releases, and product pages as if everyone already knows their meaning. But for writers, editors, project managers, and content strategists, clarity starts with understanding AI terminology.

In a recent Wall Street Journal piece, “I’ve Seen How AI ‘Thinks.’ I Wish Everyone Could” (Oct 18-19, 2025), John West describes the high-stakes race to incorporate AI technology into all kinds of products without truly understanding how it works. He quotes the laughably broad definition of AI from Sam Altman, CEO of OpenAI: “highly autonomous systems that outperform humans at most economically valuable work.” Then West explains how this definition aptly describes his washing machine, “which outperforms my human ability to remove stains and provides vast economic value.”

The challenge with defining anything AI is that we are humans living within our human context. Another challenge is that some terms have overlapping meanings.

I ran into this last challenge when I was asked by the hosts of Coffee and Content to describe the difference among the terms responsible AI, trustworthy AI, and ethical AI. See my response in the first video clip on my website’s Speaking page. There might be a distinction there without a true difference.

This post offers a guide: an AI glossary of the most common terms you’re likely to see (and maybe use). You don’t need a computer science degree, just curiosity and a desire to communicate responsibly about technology that’s reshaping our work.

To make it easier to navigate, I’ve grouped the terms into eight categories:

- Categories of AI – the broad types of systems and approaches

- Architecture of AI – how current AI systems work

- Characteristics of AI – what makes an AI system trustworthy and usable

- Data Related to AI – how data for and in AI is described

- Performance of AI – types of glitches in AI’s function, use, and output

- Principled AI Categories – the ethics and governance frameworks that guide responsible use

- Use-Related Terms – how AI can be applied in real-world contexts

- Prompting AI – approaches to using prompts to interact with AI

Whether you’re editing a white paper, explaining AI to stakeholders, or just trying to keep your buzzwords straight, this glossary is meant to help you turn AI talk into human meaning. Each entry includes the source for the definition. See the full list of references in the final section of this post.

Categories and Types of AI

Not all AI is built—or behaves—the same way. Some systems generate text or images, others make predictions, and a few can even take independent actions. Understanding these categories helps us separate the realistic from the futuristic, the technical from the buzz.

The terms in this section describe the major types of AI systems you’ll encounter, from narrow and predictive tools that quietly power everyday products to agentic and general-purpose systems that push toward autonomy. Knowing where each fits gives writers, editors, and project managers a clearer sense of what today’s AI can and can’t do.

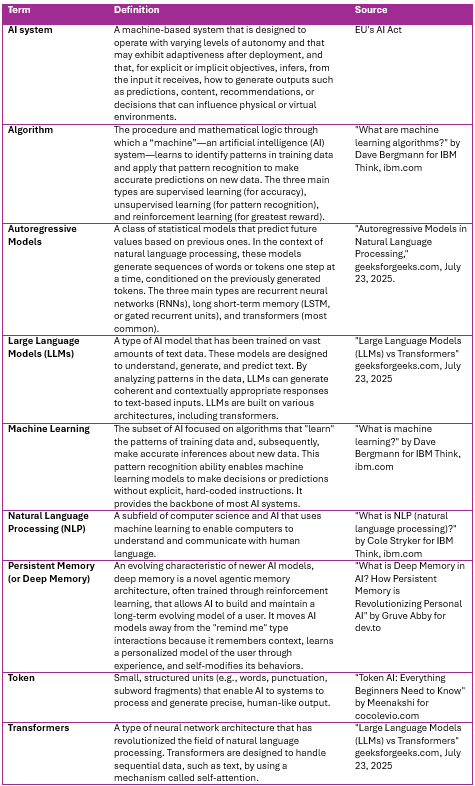

Architecture of AI

Behind every AI system is an architecture—a mix of models, methods, and moving parts that make the magic happen. Terms such as algorithm, machine learning, and transformer describe how AI systems process information. Others, such as token, deep memory, and autoregressive models, explain how they handle language and context. Understanding these building blocks helps demystify the technology and reminds us that even the most advanced AI still runs on human-designed frameworks and logic. Note that when we talk about an “AI model,” we often mean an algorithm.
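For readers who like to see an idea in code, here is a minimal Python sketch of two of the terms above: tokens and autoregressive generation. It is a toy under loud assumptions. Real systems split text into subword tokens and use transformer models with billions of parameters, while this sketch splits on spaces and simply counts which word tends to follow which. The function names (`tokenize`, `train_bigram_model`, `generate`) are made up for illustration.

```python
from collections import Counter, defaultdict


def tokenize(text):
    """Split text into lowercase word tokens (real systems use subword tokens)."""
    return text.lower().split()


def train_bigram_model(tokens):
    """Count which token follows which; this lookup table is the toy 'model'."""
    followers = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        followers[current][following] += 1
    return followers


def generate(model, start, max_tokens=4):
    """Autoregressive loop: each new token is predicted from the output so far."""
    output = [start]
    for _ in range(max_tokens):
        candidates = model.get(output[-1])
        if not candidates:
            break  # the model has never seen anything follow this token
        # Choose the follower seen most often in the training text
        output.append(candidates.most_common(1)[0][0])
    return output


corpus = "ai reads text and ai reads text and ai writes text"
model = train_bigram_model(tokenize(corpus))
print(generate(model, "ai"))  # ['ai', 'reads', 'text', 'and', 'ai']
```

The point to notice is the loop in `generate`: the system produces one token at a time and feeds its own output back in, which is what "autoregressive" means.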

Characteristics of Trustworthy AI Systems

A “trustworthy” AI system has defining traits that reveal how it works, how reliable it is, and how responsibly it’s used. Some of these characteristics are technical—such as accuracy, robustness, and reliability—while others are ethical and human-centered—such as fairness, privacy, and transparency. Understanding these qualities helps communicators, editors, and project managers explain not just what an AI system does, but how it earns (or loses) our trust.

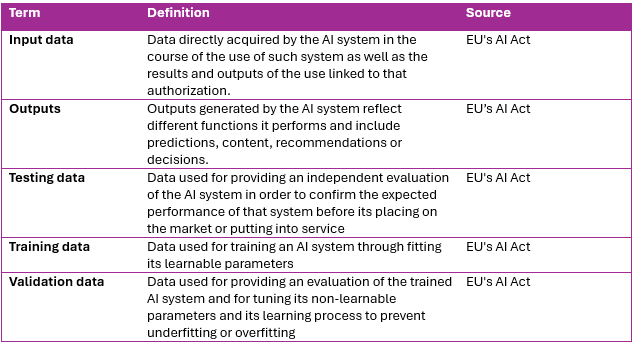

Data Related to AI

Every AI system lives and dies by its data. The information used to train, test, and validate a model determines how well it performs—and how fairly it behaves. Understanding the types of data involved in an AI system helps us see where quality, bias, and accountability come into play. Each step in the data lifecycle shapes what the system learns, how it responds, and how much we can trust its results.
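The idea of separate training, validation, and test data can be sketched in a few lines of Python. This is an illustration only: the 70/15/15 proportions, the `split_dataset` name, and the made-up records are assumptions, not a recommendation from any of the sources cited in this post.

```python
import random


def split_dataset(records, train_pct=70, validation_pct=15, seed=42):
    """Shuffle records, then cut them into train, validation, and test sets."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    n_train = len(shuffled) * train_pct // 100
    n_validation = len(shuffled) * validation_pct // 100
    train_set = shuffled[:n_train]
    validation_set = shuffled[n_train:n_train + n_validation]
    test_set = shuffled[n_train + n_validation:]  # whatever remains
    return train_set, validation_set, test_set


records = [f"example-{i}" for i in range(20)]
train_set, validation_set, test_set = split_dataset(records)
print(len(train_set), len(validation_set), len(test_set))  # 14 3 3
```

Keeping the three sets separate is what lets a team check a model against examples it has never seen.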

Performance Issues with AI

When we talk about how well an AI system works, we’re really talking about how well it performs under real conditions. Performance measures go beyond speed or accuracy; they tell us whether the system is reliable, efficient, and fair when faced with new data or unexpected inputs. Understanding terms like data leakage, hallucination, drift, and deepfake helps communicators explain not just what AI gets right, but also where it can stumble.

Principled AI Use Categories

Ethical and responsible AI doesn’t happen by accident. It’s guided by principles that shape how systems are designed, deployed, and governed. Frameworks and regulations from bodies such as UNESCO, the European Union (the AI Act), and NIST outline key categories, including trustworthy, responsible, and ethical AI. These principles help ensure that AI serves people, protects rights, and earns public confidence. Understanding the language of principled AI use gives communicators and project leaders the vocabulary to discuss not just what AI can do, but what it should do.

Use-Related AI Terms

The way we use AI—and the way AI uses us—depends on the systems, safeguards, and habits we build around it. These terms describe how humans interact with AI tools, how organizations manage risk, and how professionals stay accountable for outcomes. From privacy-enhancing technologies to retrieval-augmented generation, understanding these concepts helps us make smarter choices about when to rely on automation, when to step in, and how to keep our work authentic in an AI-driven world.
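Retrieval-augmented generation (RAG) is easier to picture with a small example. The Python sketch below is a simplification under stated assumptions: it ranks documents by counting shared words, where production systems typically use vector search, and it stops at building the prompt instead of calling a model. The style-guide sentences and the function names are invented for illustration.

```python
import re


def words(text):
    """Lowercase the text and return its set of words, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Return the top_k documents sharing the most words with the question."""
    ranked = sorted(documents,
                    key=lambda doc: len(words(question) & words(doc)),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(question, documents):
    """Augment the question with retrieved context before it goes to a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return ("Answer using only the context below.\n"
            f"Context:\n{context}\n"
            f"Question: {question}")


style_guide = [
    "Use sentence case for all headings.",
    "Spell out numbers below ten in body text.",
    "Write alt text for every image.",
]
print(build_prompt("How should headings be capitalized?", style_guide))
```

Only the retrieved passage reaches the model, which is how RAG grounds an answer in a trusted source instead of relying on the model's training data alone.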

Prompting AI

Interacting with AI means having a conversation: what you get back depends on what you say. The field of prompting has quickly evolved into its own discipline, with techniques that help users guide AI systems toward clearer, more accurate, and more useful results. Terms like prompt engineering, prompt chaining, and prompt strategies describe different ways to shape an AI model’s responses, while concepts like prompt formulas, prompt libraries, and prompt patterns help users work more consistently and efficiently. Understanding these tools turns prompting from guesswork into a skill—one that empowers you to collaborate with AI, not just query it.
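Two of these terms, prompt libraries and prompt chaining, can be sketched in Python. Everything here is hypothetical: `echo_model` is a stand-in that only wraps the prompt it receives in brackets, so the example runs without any AI service, and the template names and wording are invented for illustration.

```python
# A tiny "prompt library": reusable templates with a {text} placeholder
PROMPT_LIBRARY = {
    "summarize": "Summarize this draft in one sentence: {text}",
    "simplify": "Rewrite this summary for a non-technical audience: {text}",
}


def echo_model(prompt):
    """Hypothetical stand-in for a real AI call; it just echoes the prompt."""
    return f"[response to: {prompt}]"


def run_chain(step_names, first_input, model):
    """Prompt chaining: each step's response becomes the next step's input."""
    result = first_input
    for name in step_names:
        prompt = PROMPT_LIBRARY[name].format(text=result)
        result = model(prompt)
    return result


final = run_chain(["summarize", "simplify"], "AI glossary draft", echo_model)
print(final)
```

With a real model in place of `echo_model`, the second prompt would receive the actual summary, so each step can stay short and focused.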

AI isn’t magic—it’s language, logic, and a lot of human choices. The more clearly we understand the words around it, the better we can shape the technology to serve real needs. Whether you’re writing documentation, editing marketing copy, or leading a project that uses AI, knowing the difference between a model and a mindset keeps your work grounded in meaning, not mystery.

Clear terminology leads to clear thinking—and clear thinking leads to trustworthy communication. So the next time someone drops a buzzword in a meeting, you’ll have the confidence to pause, translate, and turn AI talk into human meaning.

Interested in reading more about a communicator’s perspective on generative AI? Check out some of my recent blog posts:

✅ AI Prompting for Bloggers: My Trial-and-Error Discoveries

✅ Leveling an Editorial Eye on AI

✅ Safeguarding Content Against AI Slop

✅ Agent vs Agency in GenAI adoption: Framing Ethical Governance

✅ Ethical Use of GenAI: 10 Principles for Technical Communicators

✅ A New Code for Communicators: Ethics for an Automated Workplace

✅ GenAI in Professional Settings: Adoption Trends and Use Cases

✅ Designing Content for AI Summaries: A Practical Guide for Communicators

References

Abby, Gruve. “What Is Deep Memory in AI? How Persistent Memory Is Revolutionizing Personal AI.” September 21, 2025. dev.to.

Aronson, Samuel. “Research Spotlight: Generative AI ‘Drift’ and ‘Nondeterminism’ Inconsistencies Are Important Considerations in Healthcare Applications.” August 13, 2024. massgeneralbrigham.org.

Bergmann, Dave. “What are machine learning algorithms?” IBM Think. ibm.com.

Bergmann, Dave. “What is machine learning?” IBM Think. ibm.com.

Britannica. (Unsigned webpage.) “cognitive bias.” britannica.com.

Dilmegani, Cem and Sila Ermut. “Handle AI Ethics Dilemmas with Frameworks & Tools.” October 10, 2025. research.aimultiple.com.

European Parliament and the Council of the European Union. Regulation (EU) 2024/1689 (Artificial Intelligence Act). June 13, 2024.

Gadesha, Vrunda. “What is prompt engineering?” IBM Think. ibm.com.

Geeks for Geeks. (Unsigned webpage.) “Autoregressive Models in Natural Language Processing.” July 23, 2025. geeksforgeeks.org.

Geeks for Geeks. (Unsigned webpage.) “Large Language Models (LLMs) vs Transformers.” July 23, 2025. geeksforgeeks.org.

Geeks for Geeks. (Unsigned webpage.) “What is Prompt Engineering?” July 14, 2025. geeksforgeeks.org.

Gomstyn, Alice. “What is trustworthy AI?” IBM Think. ibm.com.

Hilliard, Airlie. “What is Ethical AI?” July 4, 2023. holisticai.com.

IBM Data and AI Team. “Understanding the different types of artificial intelligence.” IBM Think. ibm.com.

Kamran, Aama. “What is RAG in AI—A Comprehensive Guide.” December 6, 2024. metaschool.so.

Makadia, Hardik. “Agentic AI vs Generative AI: Key Differences Explained.” July 10, 2025. wotnot.io.

Marechal, Cyril. “Human-In-The-Loop: What, How, and Why.” devoteam.com.

McKinsey & Company. (Unsigned article.) “What is artificial general intelligence?” March 21, 2024. mckinsey.com.

Meenakshi. “Token AI: Everything Beginners Need to Know.” May 20, 2025. cocolevio.com.

Microsoft. (Unsigned webpage.) “Generative AI vs. Other AI Types.” microsoft.com.

ModelOp. (Unsigned webpage.) “Traceability.” modelop.com.

Mucci, Tim. “What is predictive AI?” IBM Think. ibm.com.

Mucci, Tim. “Five open-source AI tools to know.” IBM Think. ibm.com.

National Institute of Standards and Technology. “Artificial Intelligence Risk Management Framework.” January 2023. nvlpubs.nist.gov.

Niederhoffer, Katie, Gabriella Rosen Kellerman, Angela Lee, Alex Liebscher, Kristina Rapuano, and Jeffrey T. Hancock. “AI-Generated ‘Workslop’ Is Destroying Productivity.” Harvard Business Review. September 22, 2025. hbr.org.

Oleksandra. “A Guide to Responsible AI: Best Practices and Examples.” August 9, 2024. codica.com.

Project Management Institute (PMI). “Talking to AI: Prompt Engineering for Project Managers.” (An online course.) pmi.org.

Stryker, Cole, and Jim Holdsworth. “What is Natural Language Processing (NLP)?” IBM Think. ibm.com.

Sustainability Directory. (Unsigned webpage.) “Sustainable Artificial Intelligence.” climate-sustainability-directory.com.

Tal, Rotem. “AI data leakage: Is your AI assistant spilling your secrets?” August 11, 2024. guard.io.

Trend, Alice, Liming Zhu, and Qinghua Lu. “Good AI, bad AI: decoding responsible artificial intelligence.” November 23, 2023. csiro.au.

Wikipedia. “Hallucination (artificial intelligence).” en.wikipedia.org.

(Image by Nicky ❤️🌿🐞🌿❤️ from Pixabay)

